Quality Assurance System

A Quality Assurance System is composed of multiple elements, such as Analysis & Assessment Planning, Assessment Policies & Procedures, and Data Tools & Human Resources. Its purpose is to demonstrate alignment with overarching standards or learning outcomes and to develop an efficient, sustainable process for systematically reviewing assessment instruments and data to support continuous improvement of the program and/or the unit.

The following PowerPoint presentation was developed for the 2017 American Association of Colleges of Teacher Education (AACTE) Quality Support Workshop – Midwest.

Conn, C., Bohan, K., & Pieper, S. (2017, August). Developing a quality assurance system and examining the validity and reliability of performance assessments. Presentation at the annual conference of the American Association of Colleges of Teacher Education (AACTE) Quality Support Workshop – Midwest, Minneapolis, MN.

The sections below provide a variety of resources and templates.

Quality Assurance System

Several strategies can help in designing a comprehensive Quality Assurance System. These include:

- Conduct a high-level needs analysis

- Complete an assessment audit

- Identify and implement data tools

- Develop assessment policies and procedures

- Discuss results and engage stakeholders throughout the process

The following resources and templates support these strategies.

- Quality Assurance System: Guiding Questions for Strategies (word)

- CAEP EPP Assessment Audit Template (pdf)

- CAEP EPP Assessment Plan – Actions Planned Timetable Template (word)

- CAEP EPP Master Assessment Plan and Calendar Template (word)

- CAEP Evidence File Template for Quantitative and Qualitative Data (word)

- CAEP Evidence File Template for Documentation (word)

- Process for Updating, Reviewing, and Reporting EPP Level Self-Study Data Files (pdf)

- Example Policy and Procedures for Systematic Review of Program Level Assessment Data (pdf)

- Biennial Report Template (pdf)

- Program Level Biennial Reports Master Chart Template (xlsx)

Validity Inquiry Process

The following PowerPoint presentation provides an overview of the Validity Inquiry Process (VIP):

Conn, C., Bohan, K., & Pieper, S. (2018, April). Ensuring meaningful performance assessment results: A reflective practice model for examining validity and reliability. Presentation at the annual conference of the American Educational Research Association, New York, NY.

Bohan, K., Conn, C. A., & Pieper, S. L. (2019). Engaging faculty in examining the validity of locally developed performance-based assessments. In K. K. Winter, H. H. Pinter, & M. K. Watson (Eds.), Performance-based assessment in 21st century teacher education (pp. 81-119). doi: 10.4018/978-1-5225-8353-0.ch004

Overview

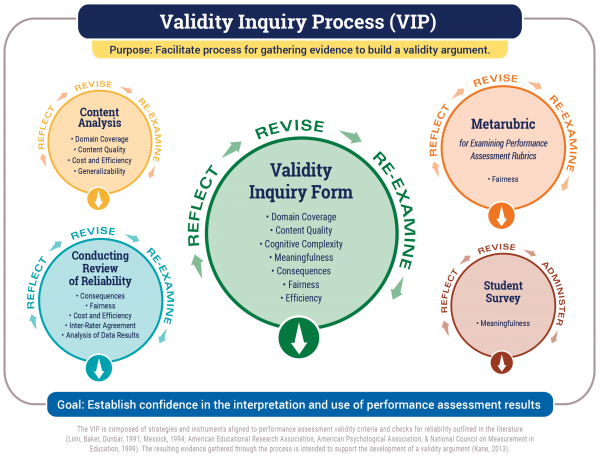

The Validity Inquiry Process (VIP) is intended to help gather evidence for building a validity argument about performance assessments. The process is aligned with eight validity criteria described in the literature (Linn, Baker, & Dunbar, 1991; Messick, 1994): 1) Domain Coverage, 2) Content Quality, 3) Cognitive Complexity, 4) Meaningfulness, 5) Generalizability, 6) Consequences, 7) Fairness, and 8) Cost and Efficiency.

Resources

The VIP provides practical resources, including strategies and instruments, for examining locally developed performance assessments. These strategies and instruments are designed to gather evidence for a validity argument that supports the interpretation and use of performance assessment results. The instruments developed to guide the VIP include:

- Content Analysis Strategies for Building a Validity Argument (pdf)

- Content Analysis: Example chart for documenting Content Quality and Generalizability, Strategies 2 and 5 (pdf)

- Validity Inquiry Form for Examining Performance Assessments (word, pdf)

- Metarubric for Examining Performance Assessment Rubrics (word, pdf)

- Student Survey: Meaningfulness of Performance Assessments (word, pdf)

- Validity Argument Template (word)

- VIP Faculty Satisfaction Survey (word, pdf)

Video Examples of Facilitated VIP Meetings

- VIP Overview

- Purpose of Performance Assessment: Response

- Purpose of performance assessment: Discussion about communicating purpose in the assignment instructions

- Cognitive complexity: Balance across program

- Fairness: Do all students have the same opportunity to gain the knowledge and skills necessary to complete the assessment?

- Rubric descriptions: Do they distinguish different levels of the performance?

References

Kane, M. (2013). The argument-based approach to validation. School Psychology Review, 42(4), 448-457.

Linn, R. L., Baker, E. L., & Dunbar, S. B. (1991). Complex, performance-based assessment: Expectations and validation criteria. Educational Researcher, 20(8), 15-21.

Messick, S. (1994). The interplay of evidence and consequences in the validation of performance assessments. Educational Researcher, 23(2), 13-23.

Pieper, S. L. (2012, May 21). Evaluating descriptive rubrics checklist (pdf)

Do not reprint information contained on or linked from this website without written permission of the authors.

Inter-rater Agreement and Calibration Strategies

The inter-rater agreement and calibration strategies described on this page are a component of the Quality Assurance System (QAS) implemented by NAU Professional Education Programs (PEP). NAU PEP will continue to implement these strategies and may change the process at any time.

Inter-rater agreement training:

Important note about these files: The Excel spreadsheets contain password-protected cells. If you would like to start from scratch and modify the files, please create a copy of the file on your computer. The Final Report PDF is secured to prevent editing.

Fall 2016 CAEPCon CWS Evaluator Training Inter-rater Agreement Template (Excel)

Fall 2016 CAEPCon Expert Panel Scoring Sheet for Calibration Session Template (Excel)
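The templates are not reproduced here, and this page does not describe the formulas they use. As a rough illustration only, the minimal sketch below assumes that a calibration session compares one evaluator's rubric scores with the expert panel's scores row by row and reports exact agreement (identical scores) and adjacent agreement (scores within one level), two summary figures often reported in rater training. The function `calibrate`, the 1-4 scale, and the sample scores are hypothetical.

```python
"""Sketch: compare one evaluator's rubric scores with expert-panel scores.

Assumptions (not taken from the NAU templates): scores are integers on an
ordinal rubric scale, there is one score per rubric row, and "adjacent"
means the two scores differ by at most one level.
"""
from dataclasses import dataclass


@dataclass
class CalibrationResult:
    exact: float     # proportion of rows where the scores match exactly
    adjacent: float  # proportion of rows within one level (includes exact matches)


def calibrate(evaluator: list[int], expert: list[int]) -> CalibrationResult:
    """Return exact and adjacent agreement between an evaluator and the expert panel."""
    if len(evaluator) != len(expert) or not evaluator:
        raise ValueError("score lists must be non-empty and the same length")
    n = len(evaluator)
    exact = sum(a == b for a, b in zip(evaluator, expert))
    adjacent = sum(abs(a - b) <= 1 for a, b in zip(evaluator, expert))
    return CalibrationResult(exact / n, adjacent / n)


if __name__ == "__main__":
    # Hypothetical scores for five rubric rows on a 1-4 scale.
    evaluator_scores = [3, 2, 4, 3, 1]
    expert_scores = [3, 3, 4, 2, 3]
    result = calibrate(evaluator_scores, expert_scores)
    print(f"exact: {result.exact:.0%}, adjacent: {result.adjacent:.0%}")
```

With these hypothetical scores the script prints `exact: 40%, adjacent: 80%`. Adjacent agreement counts exact matches too, so it is always at least as high as exact agreement; what threshold an evaluator must reach to be considered calibrated is a local decision that this page does not specify.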

Graham, M., Milanowski, A., & Miller, J. (2012). Measuring and promoting inter-rater agreement of teacher and principal performance ratings. Washington, DC: Center for Educator Compensation Reform. Retrieved from https://files.eric.ed.gov/fulltext/ED532068.pdf

Stemler, S. E. (2004). A comparison of consensus, consistency, and measurement approaches to estimating interrater reliability. Practical Assessment, Research & Evaluation, 9(4), 1-11. Retrieved from https://scholarworks.umass.edu/cgi/viewcontent.cgi?article=1137&context=pare

Review slides 59-65 of the PowerPoint presentation "Developing a quality assurance system and examining the validity and reliability of performance assessments."
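Stemler (2004) distinguishes consensus estimates, which ask whether raters assign the same score, from consistency estimates, which ask whether raters order performances in the same way even if they use the scale differently. The minimal Python sketch below computes one or two examples of each on hypothetical data: percent agreement and Cohen's kappa (consensus) and the Pearson correlation (consistency). Measurement approaches, such as many-facets Rasch models, are not covered. The function names and scores are illustrative assumptions, not part of the NAU templates.

```python
"""Sketch: consensus vs. consistency estimates of inter-rater reliability.

Assumptions: two raters score the same performances on an integer rubric
scale; the data below are hypothetical.
"""
from collections import Counter
from math import sqrt


def _check(a: list[int], b: list[int]) -> None:
    if len(a) != len(b) or not a:
        raise ValueError("score lists must be non-empty and the same length")


def percent_agreement(a: list[int], b: list[int]) -> float:
    """Consensus estimate: share of performances given identical scores."""
    _check(a, b)
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a: list[int], b: list[int]) -> float:
    """Consensus estimate corrected for chance: (p_o - p_e) / (1 - p_e)."""
    _check(a, b)
    n = len(a)
    p_observed = percent_agreement(a, b)
    counts_a, counts_b = Counter(a), Counter(b)
    # Chance agreement: sum over categories of the product of the two raters'
    # marginal proportions (a category one rater never uses contributes 0).
    p_chance = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if p_chance == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_observed - p_chance) / (1 - p_chance)


def pearson_r(a: list[int], b: list[int]) -> float:
    """Consistency estimate: linear correlation between the two raters' scores."""
    _check(a, b)
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        raise ValueError("correlation is undefined when a rater's scores do not vary")
    return cov / sqrt(var_a * var_b)


if __name__ == "__main__":
    # Hypothetical scores: rater B is always exactly one level above rater A.
    rater_a = [1, 2, 3, 2, 1, 3]
    rater_b = [2, 3, 4, 3, 2, 4]
    print(f"percent agreement: {percent_agreement(rater_a, rater_b):.0%}")
    print(f"Cohen's kappa: {cohens_kappa(rater_a, rater_b):.2f}")
    print(f"Pearson r: {pearson_r(rater_a, rater_b):.2f}")
```

Because rater B scores every performance one level higher than rater A, the script reports a percent agreement of 0% and a kappa of about -0.29, yet a Pearson r of 1.00. This is a pattern that consensus estimates flag and consistency estimates do not: the raters order performances identically but apply the scale differently.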